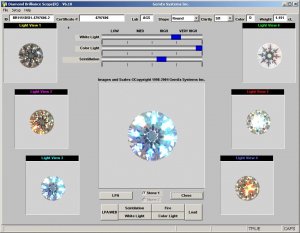

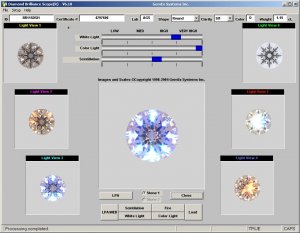

I have data on how different brands perform in the B Scope.

I have imaged many Eightstars, A Cut Aboves, Superb Certs and lots more branded and unbranded ones.

However, releasing test results that I have done for consumers would be a breach of confidentiality.

I can say this:

The Brilliance Scope is very repeatable. Yes, there is variation, but not much.

When a seller provides a B Scope and the consumer wants it verified, about 99% of the time the results are very close.

I have had a few occasions where there is a variance. When this happens, the information is transmitted to Gemex, and they immediately resolve the situation.

In one instance, the variance proved that either my B Scope or the seller's B Scope had a problem.

Gemex traveled to me to go over my machine, and also traveled personally to check out the other machine. It was concluded that the other machine had a problem with its filters, and they of course corrected the situation.

I don't know of any equipment company that makes such a concerted effort to keep things straight and accurate in the manner that Gemex does.

If changes are observed, the software is updated. Given the improvements in cutting and facet design, ignoring them would be negligent. Gemex is intensely supportive of the results the Bscope provides.

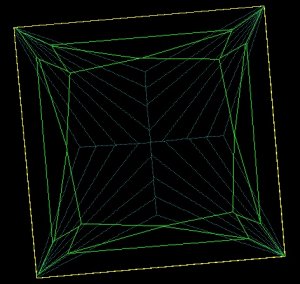

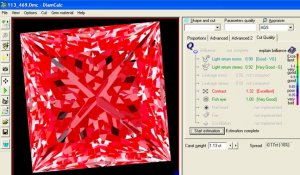

I am getting a stone this week that is being sent by Gemex for research purposes. I will have permission to post the results for this stone, as it gets a very unusual result even though the stone doesn't have the "classic ideal proportions". This stone has been tested in various Bscope machines and appears to "break all the formerly assumed rules". The question is WHY? Gemex wants to know, and I am certainly also intensely interested in the facts of why this is happening. Is this the exception to what we've learned so far? Is it that these proportions will consistently yield such incredible results? Can the WHY really be answered? I will have the stone next week and will begin an intensive study of it. The results of this can be reported to the public.

Furthermore, the opinion expressed by some sellers is not current. The camera, computer board, software, and laser alignment have all been improved recently. Gemex's owner and tech support personnel have actually traveled all over the world to make these corrections. Very few, if any, other equipment suppliers will make such a deliberate and concerted effort for their customers.

As to consistent ratings of each brand of stone, or type of stone, while I can't disclose the details of my reports, I can say that there is variance in every brand of stone. As I've written a "zillion" times: DIAMONDS ARE UNIQUE AND AS SUCH THERE IS VARIANCE, EVEN IN THE SAME BRANDS. IN THE BROAD CONSIDERATION OF THE RESULTS, ALL OF THE FINER CUT STONES ARE RATED WELL, BUT SOME ARE BETTER THAN OTHERS. While I could certainly ask former clients if I could publish their results publicly, I believe that this wouldn't be useful. To do this without far more interpretation of each test result, and a written or oral narrative explaining the results, would be unfair to the sellers of each branded diamond.

There is another "problem" that is sort of consumer generated. Everyone expects the stone they pick to get the maximum result of "very high". There are plenty of very acceptable stones that grade "high" as well. Recently consumers have recognized this, and are a lot more flexible. Interpreting the light return results requires a closer look at each individual analysis, and at the results of both the Analyzer and the viewer. In the early days of the BScope, consumers felt really disappointed if the stone they submitted didn't get the highest ratings, and they chose other stones that weren't necessarily better. This did generate some "sour grapes" with sellers if their stones didn't always get the ultimate grading.

Gemex was pressured by these sellers to have their stones rate differently, but Gemex didn't give in to this. Instead, research, study and a very concerted effort were (and still are) made by them to provide the most comprehensive report available.

The world changes every day, and so does technology. To rest on outdated standards and potentially obsolete information, and not constantly try to improve or be more accurate, isn't what Gemex is about.

There is a vast difference between the attitudes of those selling vs. those who are analytical. This is why a lot of consumers have me review the results of testing, so that they get confirmation that the results are unbiased and impartial.

I will be posting the information on the stone that is coming as soon as it can be completed; maybe I will post results of the various tests as I do them.

Gemex also has a stone that is sort of their benchmark testing stone. After my machine was upgraded, this stone was sent to me, and I tested it to confirm that my ratings were the same as the results from the other machines they have tested it with.

My results were the same within a very close tolerance. It was mentioned in this thread that there is a question about a 5% difference in test results and how worthwhile the testing is. Keep in mind that the 5% variance is about the greatest variance there is, and that when there is a variance, the difference between machines is far more commonly a lot less.

For my own satisfaction, I do image just about every stone, whether the consumer wants the results or not. I do this for my own level of interest in how repeatable the results are, and to constantly observe any differences. I suppose this might be considered unnecessary "hair splitting", but I learn and improve my knowledge on an ongoing basis too.

Rockdoc

